A) 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 1, 2, 1, 4, 1, 1, 1, 1, 2, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 1, 4, 1, 1, 1, 2, 2, 1, 1, 1, 5, 1, 1, 1, 1, 1, 3, 3, 1, 3, 1, 1, 1, 7, 3, 1, 1, 1, 2, 1, 2, 1, 1, 1, 2, 1, 1, 1, 1, 3, 1, 1, 2, 2, 1, 3, 2, 2, 1, 4, 1, 1, 2, 2, 1, 1, 2, 1, 1, 2, 1, 3, 2, 2…

and

B) 1, 1, 1, 1, 1, 1, 1, 2, 5, 3, 1, 1, 1, 1, 2, 1, 2, 1, 3, 1, 1, 1, 1, 3, 1, 3, 3, 3, 4, 1, 2, 1, 2, 3, 1, 2, 1, 1, 1, 2, 1, 1, 1, 3, 1, 1, 1, 3, 3, 2, 3, 3, 1, 1, 5, 4, 3, 2, 3, 1, 7, 3, 3, 1, 1, 2, 1, 1, 1, 2, 2, 1, 2, 1, 2, 3, 1, 2, 3, 4, 1, 1, 1, 1, 2, 1, 5, 3, 1, 1, 3, 2, 3, 1, 3, 3, 4, 1, 4, 1…

What are these sequences, and what links them? Well, take the prime numbers in order, sort the digits of each one, and then count how many prime factors (with multiplicity) the resulting number has. Sorting the digits lowest first gives sequence A, and sorting them highest first gives sequence B. A 1 means the sorted number is itself prime. The first few prime numbers have only one digit, so sorting changes nothing. Then there is 11, which sorted is still 11, and 13, which sorts to 13 or 31, both of which are prime. 19 is the first prime number which, when its digits are sorted highest to lowest, gives a composite number: 91 = 7 × 13, so the eighth term of B is 2.
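A minimal Python sketch of that construction is below. The helper names (`primes_below`, `big_omega`, `sort_digits`, `sequence`) are my own, and it assumes that when digits are sorted lowest first any leading zeros are simply dropped (so 101 becomes 11), which agrees with the terms listed above.

```python
def primes_below(limit: int) -> list[int]:
    """All primes p < limit, via a sieve of Eratosthenes (assumes limit >= 2)."""
    sieve = bytearray([1]) * limit
    sieve[0:2] = b"\x00\x00"  # 0 and 1 are not prime
    for i in range(2, int(limit ** 0.5) + 1):
        if sieve[i]:
            # Cross off every multiple of i from i*i upwards
            sieve[i * i::i] = bytearray(len(range(i * i, limit, i)))
    return [i for i, is_prime in enumerate(sieve) if is_prime]


def big_omega(n: int) -> int:
    """Number of prime factors of n counted with multiplicity (1 for a prime)."""
    count, d = 0, 2
    while d * d <= n:
        while n % d == 0:
            n //= d
            count += 1
        d += 1
    return count + (1 if n > 1 else 0)  # whatever is left over is prime


def sort_digits(p: int, descending: bool) -> int:
    """Rearrange the decimal digits of p; int() drops any leading zeros."""
    return int("".join(sorted(str(p), reverse=descending)))


def sequence(limit: int, descending: bool) -> list[int]:
    """Prime-factor count of each prime below limit after sorting its digits."""
    return [big_omega(sort_digits(p, descending)) for p in primes_below(limit)]


print(sequence(100, descending=False))  # sequence A: lowest digit first
print(sequence(100, descending=True))   # sequence B: highest digit first
```

Running it prints the 25 terms for the primes below 100, which match the start of both lists above.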

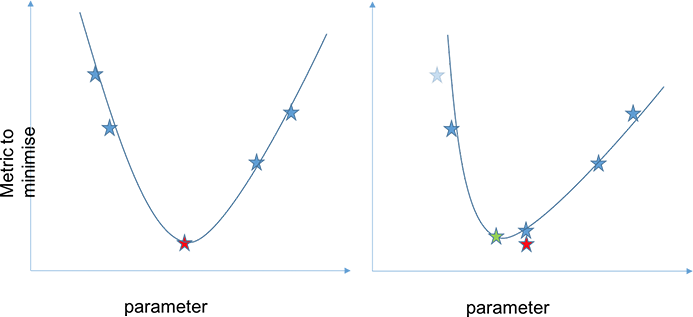

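A property of this pair of sequences is that, at least for the first 9592 terms (one for each prime below 100,000), the sum of the first n terms of B is greater than the sum of the first n terms of A once n ≥ 8; for the first seven terms both sequences are all 1s, so the sums are equal. This is expected, because the intermediate values (the primes with their digits sorted) are, for B, (1) larger and (2) more likely to end in 0 or 2, since sorting highest first pushes the small digits to the end, and a multi-digit number ending in 0 or 2 is even and therefore composite. Larger numbers also tend, on average, to have more prime factors, since there are more ways to build them out of smaller primes. I don’t know whether this holds for all n; it is a conjecture.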
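To check the partial-sum claim, here is a sketch that recomputes both sequences for the 9592 primes below 100,000 and lists every n at which B’s running total fails to exceed A’s. It uses a smallest-prime-factor sieve so the prime-factor count of every number below the limit can be read off at once. The function names are hypothetical, and leading zeros are assumed to be dropped when sorting digits lowest first.

```python
from itertools import accumulate


def omega_table(limit: int) -> tuple[list[int], list[int]]:
    """Return (omega, spf) for every n < limit.

    spf[n] is the smallest prime factor of n, and omega[n] counts the
    prime factors of n with multiplicity (omega[0] and omega[1] are 0).
    """
    spf = list(range(limit))
    for i in range(2, int(limit ** 0.5) + 1):
        if spf[i] == i:  # no smaller factor was recorded, so i is prime
            for j in range(i * i, limit, i):
                if spf[j] == j:
                    spf[j] = i
    omega = [0] * limit
    for n in range(2, limit):
        omega[n] = omega[n // spf[n]] + 1  # strip one smallest prime factor
    return omega, spf


def sort_digits(p: int, descending: bool) -> int:
    """Rearrange the decimal digits of p; int() drops any leading zeros."""
    return int("".join(sorted(str(p), reverse=descending)))


def compare_partial_sums(limit: int = 100_000) -> tuple[int, list[int]]:
    """Return (number of primes below limit, every n with sum_B(n) <= sum_A(n)).

    Assumes limit is a power of ten, so a sorted prime never has more digits
    than the limit and always indexes safely into the omega table.
    """
    omega, spf = omega_table(limit)
    primes = [n for n in range(2, limit) if spf[n] == n]
    a = [omega[sort_digits(p, descending=False)] for p in primes]  # sequence A
    b = [omega[sort_digits(p, descending=True)] for p in primes]   # sequence B
    running = zip(accumulate(a), accumulate(b))
    not_greater = [n for n, (sa, sb) in enumerate(running, start=1) if sb <= sa]
    return len(primes), not_greater


count, exceptions = compare_partial_sums()
print(count, "primes checked")
print("n where B's partial sum is not larger:", exceptions)
```

If the pattern holds over this range, `exceptions` should contain only the values 1 to 7, where both sequences are all 1s.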

I’m hoping that the sequences get accepted into the Online Encyclopedia of Integer Sequences (OEIS), which is the go-to place for sequences like these to be stored so that people can cross-reference them. If they do get accepted, they’ll be sequences A326952 and A326953, respectively. However, the OEIS carefully curates the sequences it lists, so as not to include arbitrary sequences that no one else will find interesting. I don’t know if other people will find this interesting.

Excitingly, as of the 25th of August, they have both been approved: https://oeis.org/A326952 and https://oeis.org/A326953